For the past 2.5 months I have been building a progressive web app called OpenPriceData.com (a.k.a. CPIPal.com, a.k.a. OpenPriceHistory.com; I still haven’t really decided what sounds best, wdyt?). I did a little summer research on computer vision models during my undergraduate CS studies, but I am far from an expert. So, what does a software developer do when faced with a need for many types of image processing? Turn to existing models. Specifically, I need to:

Parse store information, item names, and prices from a receipt.

Detect UPC/EAN barcodes of those items.

Infer product information (brand, weight/volume, category) from an item picture.
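For the barcode requirement, one cheap sanity check on whatever a detector returns is the check digit that UPC-A and EAN-13 codes carry as their last digit. A minimal sketch, assuming the detector hands back the digits as a string; `is_valid_gtin` is an illustrative name, not part of any library:

```python
def is_valid_gtin(code: str) -> bool:
    """Check the trailing check digit of a UPC-A (12-digit) or EAN-13 (13-digit) code."""
    if not (code.isascii() and code.isdigit()) or len(code) not in (12, 13):
        return False
    digits = [int(ch) for ch in code]
    body, check = digits[:-1], digits[-1]
    # Walking right to left over the body, the weights alternate 3, 1, 3, ...
    total = sum(d * (3 if i % 2 == 0 else 1) for i, d in enumerate(reversed(body)))
    return (10 - total % 10) % 10 == check
```

A code that fails this check was misread somewhere, so it can be rejected before it ever reaches the database.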

With those needs in mind (mostly the receipt use case; the other two are similar, though not fully worked out yet), here is my take on the state of vision and OCR models.

AI Vision Models

Thinking it would be super simple to just send API calls with base64 images and a prompt requesting the parsed data back in a certain structure, I did a quick test of Anthropic (Claude 3.5 Sonnet) and OpenAI (GPT-4o).
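Roughly, that means base64-encoding the image, attaching a prompt that spells out the structure wanted back, and refusing any reply that doesn't match it. A provider-neutral sketch: the payload field names (`prompt`, `image_base64`) and the JSON shape described in the prompt are placeholders of mine, not Anthropic's or OpenAI's actual request format.

```python
import base64
import json

PROMPT = (
    "Extract this receipt as JSON with keys 'store' (string) and "
    "'items' (list of objects with 'name' and 'price'). Return only JSON."
)

def build_request(image_bytes: bytes) -> dict:
    # Hypothetical payload shape; each provider wraps the image differently.
    return {
        "prompt": PROMPT,
        "image_base64": base64.b64encode(image_bytes).decode("ascii"),
    }

def parse_reply(reply_text: str) -> dict:
    """Parse the model's reply and reject anything missing the requested keys."""
    data = json.loads(reply_text)
    if not isinstance(data, dict) or "store" not in data:
        raise ValueError("reply does not match the requested structure")
    if not isinstance(data.get("items"), list):
        raise ValueError("reply has no 'items' list")
    for item in data["items"]:
        if not isinstance(item, dict) or not {"name", "price"}.issubset(item):
            raise ValueError("item is missing 'name' or 'price'")
    return data
```

Validating the shape only proves the reply is well-formed; it says nothing about whether the values were actually on the receipt.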

Claude:

4o:

Out of the box, they both do surprisingly well, actually!

As in, they both get ~90% of the items/prices right. The problem is that when mistakes occur, they are major. Claude hallucinates a product text identifier when it can't figure one out, and 4o hallucinates some prices. The hallucinations are based on vibes, detached from the underlying text. That is no problem at all for inferring information from product labels, and might actually be an asset, making these models the winner for that use case. But for receipt text, if it’s not exact, it’s wrong.
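One possible guard against invented prices: most receipts print a subtotal, so the parsed line items can be checked against it. A sketch assuming prices arrive as strings like "3.49"; the function name and input shape are illustrative, and this catches a wrong price but not a wrong item name.

```python
from decimal import Decimal

def prices_match_subtotal(item_prices: list[str], subtotal: str) -> bool:
    """True when the parsed line-item prices add up exactly to the printed subtotal."""
    # Decimal avoids float rounding, so 0.10 + 0.20 compares equal to 0.30.
    return sum((Decimal(p) for p in item_prices), Decimal("0")) == Decimal(subtotal)
```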

They're also the two most expensive of all the real options available. I learned that the cheaper and more "proper" way of doing receipt parsing is to run more specialized OCR models. The two I tried out are Tesseract and EasyOCR.

Base Optical Character Recognition

The problem here is that, out of the box, Tesseract and EasyOCR are both very dumb. By out of the box, I mean without any fine-tuning or other post-training adjustments, given that both projects state they are already very well optimized with receipts in mind. I did do the standard greatest hits of image processing where applicable: rescaling, grayscaling, rotating, and context hinting.
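To make two of those steps concrete, here is a dependency-free sketch of grayscaling and rescaling on a tiny image stored as nested lists of RGB tuples. In practice a library such as Pillow or OpenCV would do this work; the function names are illustrative, the luma weights are the common ITU-R BT.601 ones, and the rescale is plain nearest-neighbor.

```python
def to_grayscale(pixels):
    """Convert rows of (r, g, b) tuples to rows of 0-255 luma values."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in pixels]

def upscale(gray, factor):
    """Nearest-neighbor upscale: repeat every pixel `factor` times in x and y."""
    out = []
    for row in gray:
        wide = [value for value in row for _ in range(factor)]
        # Copy the widened row so the repeated rows are independent lists.
        out.extend(list(wide) for _ in range(factor))
    return out
```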

Here is the Tesseract result after image processing:

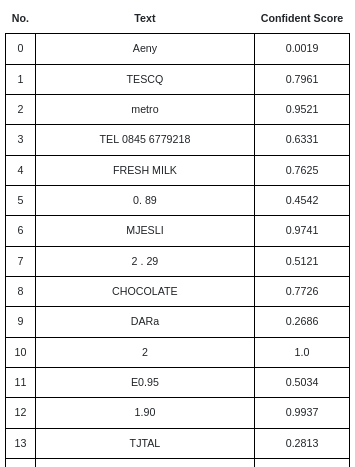

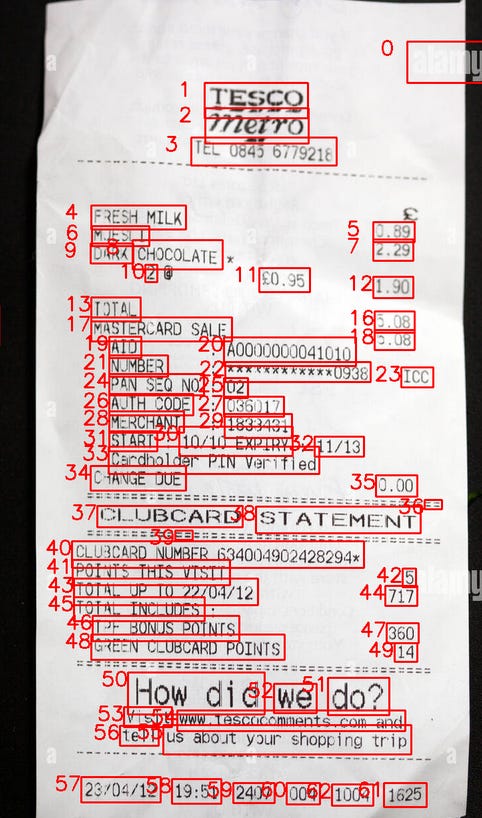

And here’s the EasyOCR result using the online demo, which appears to do its own image processing:

Overall, there’s just too great a distance between what these OCR models produce and the level of exactness I need in my app’s DB for data transformation to be consistently fruitful at this stage.

Cloud Vision API (Google)

One billing and credential rabbit hole later...

There's another service I tried, but almost didn't, because of how much of a pain it is to enter the ecosystem. I'm talking about Google, specifically the Cloud Vision API (it was far too hard to figure out that this was the one to use, and their AI "solution generator" steered me wrong!).

Have I mentioned it’s really hard to get set up, with needless barriers aplenty? Why does Google feel so bureaucratic? It’s really sad to see new users dissuaded like this, considering what I’m about to say next…

Maybe it's my sunk cost bias showing, but it's actually very impressive! It one-shot the test image with 99+% accuracy! And it looks to be the most extensible now that I’m in the ecosystem, showing the most promise for barcode detection as well.
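For reference, a text-detection call to the Cloud Vision REST endpoint (`images:annotate`) takes a JSON body with the image as base64 plus a list of requested features. The sketch below only builds that body; authentication and the HTTP call are left out, `build_annotate_body` is an illustrative name, and whether `TEXT_DETECTION` or `DOCUMENT_TEXT_DETECTION` suits receipts better is something to test rather than assume.

```python
import base64
import json

def build_annotate_body(image_bytes: bytes,
                        feature: str = "DOCUMENT_TEXT_DETECTION") -> str:
    """Build the JSON body for POST https://vision.googleapis.com/v1/images:annotate."""
    body = {
        "requests": [
            {
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                "features": [{"type": feature}],
            }
        ]
    }
    return json.dumps(body)
```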

So, what am I to do?

Google Cloud Vision API is good enough to be serviceable to me, right now, as is, at least until I get my app built out and magically have enough free engineering hours to roll my own ~everything~. So I'm using it.

But as a matter of principle, as a software dev, I don't want to lock myself into a Google product (or any third-party service, for that matter). This dilemma raises many questions, and I take my shot at answering a few of them now.

Q&A

Is it worth it to start back from EasyOCR, and train and fine-tune it for specific receipt formats? Maybe build a db for fuzzy matching?

At some point, yes, but only if the site takes off and I believe building a better model is possible.
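The fuzzy-matching idea can be tried without any training at all, using the standard library's `difflib`. A minimal sketch, assuming a small in-memory list stands in for the DB; `KNOWN_PRODUCTS`, `closest_product`, and the 0.8 cutoff are all illustrative choices:

```python
import difflib

# Hypothetical catalog of known product names; in the app this would be a DB table.
KNOWN_PRODUCTS = ["ORGANIC BANANAS", "WHOLE MILK 1 GAL", "LARGE EGGS 12CT"]

def closest_product(ocr_text: str, cutoff: float = 0.8):
    """Return the best catalog match for a noisy OCR string, or None if nothing is close."""
    matches = difflib.get_close_matches(
        ocr_text.upper(), KNOWN_PRODUCTS, n=1, cutoff=cutoff
    )
    return matches[0] if matches else None
```

With this catalog, `closest_product("organc bananas")` resolves to `"ORGANIC BANANAS"`, while a string with nothing in common with any entry returns `None` instead of a forced guess.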

Will generalist AI vision models get good enough to make bare OCR irrelevant?

I think so. The current vibe-y hallucinations are unacceptable for truth-critical systems, but I suspect we'll soon see models fall back on OCR when their confidence is low, and then, soon after, no longer need to.
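That fallback behavior could look something like the sketch below. It assumes the vision model exposes a confidence score for each field it reads, which is a hypothetical input here; the function name and the 0.9 threshold are also made up for illustration.

```python
from typing import Callable

def read_price(vision_text: str, vision_confidence: float,
               ocr_fallback: Callable[[], str], threshold: float = 0.9) -> str:
    """Trust the vision model's reading only when its confidence clears the threshold."""
    if vision_confidence >= threshold:
        return vision_text
    # Low confidence: defer to a plain OCR pass, run only when needed.
    return ocr_fallback()
```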

In sharing receipt data, why have the intermediate vision step anyways?

This is the most important question IMO. QR code summaries, receipts emailed to the address on file for the payment card, or even point-of-sale systems talking directly to budgeting apps, for example, are all less lossy, more efficient, and easy to make more accessible. Computer-to-computer interaction seems like the obvious necessity in this case, but no one's building it. So, why not me? (Why not you!)
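As a taste of what a QR code summary might carry, here is a sketch that round-trips a receipt through compact JSON. The schema (`v`, `store`, `items`, `n`, `p`) is invented for illustration; it is not an existing standard.

```python
import json

def encode_receipt(store: str, items: list[tuple[str, str]]) -> str:
    """Serialize a receipt to compact JSON text that a QR code could carry."""
    payload = {"v": 1, "store": store, "items": [{"n": n, "p": p} for n, p in items]}
    # Tight separators drop the optional whitespace to keep the payload small.
    return json.dumps(payload, separators=(",", ":"))

def decode_receipt(text: str) -> tuple[str, list[tuple[str, str]]]:
    """Recover the store name and (name, price) pairs from the encoded text."""
    payload = json.loads(text)
    return payload["store"], [(i["n"], i["p"]) for i in payload["items"]]
```

Nothing is lost or guessed in the round trip, which is the whole point compared with photographing the paper and hoping.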